Individual Project_Herman

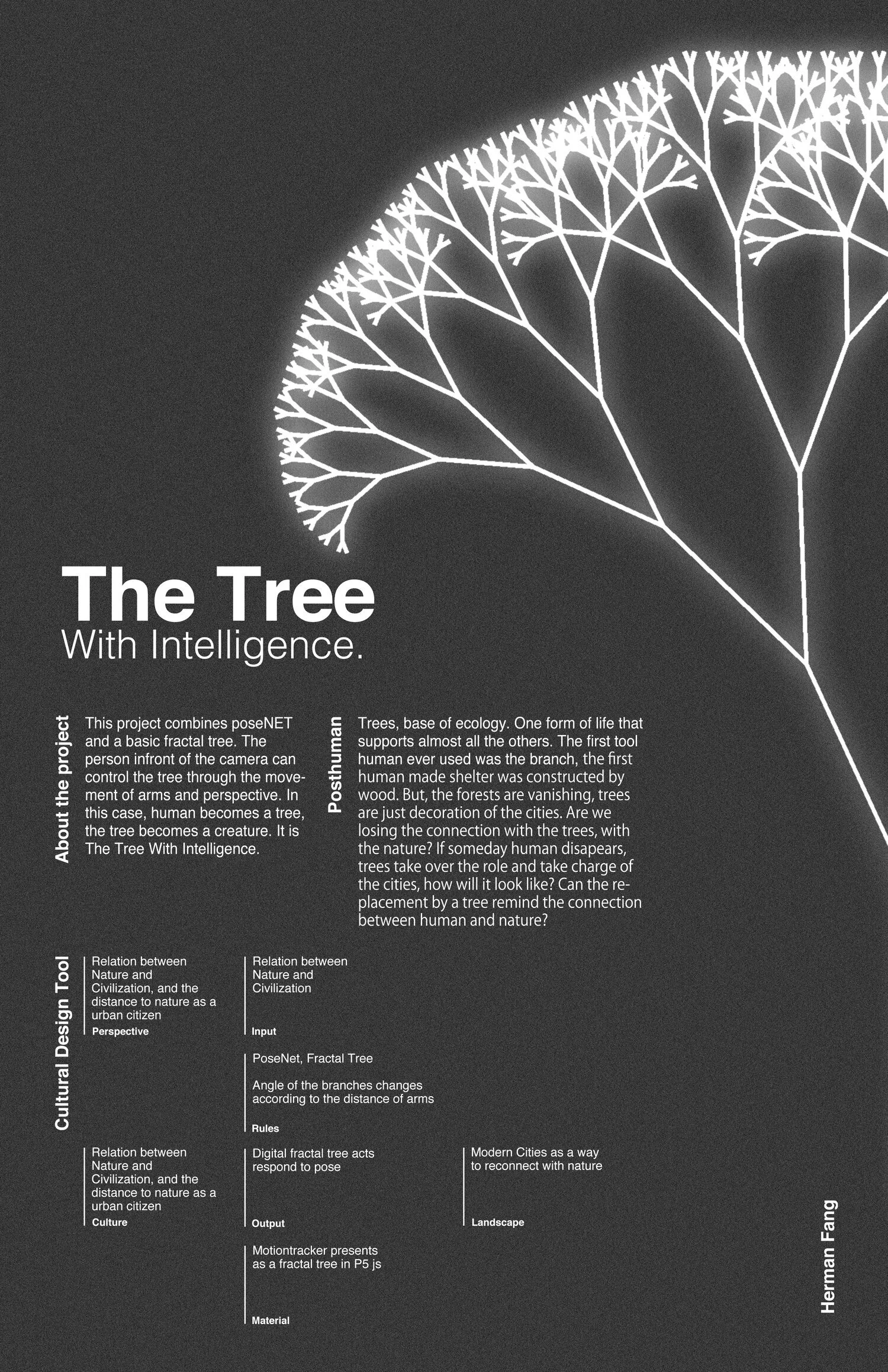

The Tree With Intelligence

From the Beginning

How can AI and machine learning form a project that covers both the cultural and the posthuman? At the beginning of this project, that was a vague concept for me. It should be interesting, it should be eye-catching, it should be AR, it should be in 3D. The ideas could be combined in hundreds, even thousands of ways, and it was a real pleasure just to imagine what I could make. What did I get? Here are the FIVE ORIGINS.

1. Gestural movement control, as in the movie “Her”

2. A digital self-parking car

3. An AR Mario with scenes built from the shadows of real-life objects

4. A 3D facial model generator

5. A tree generator based on real-life scenes

What I finally decided to work on was the fifth idea, since it was the most developed and thoroughly brainstormed one.

How I came up with it is quite a story.

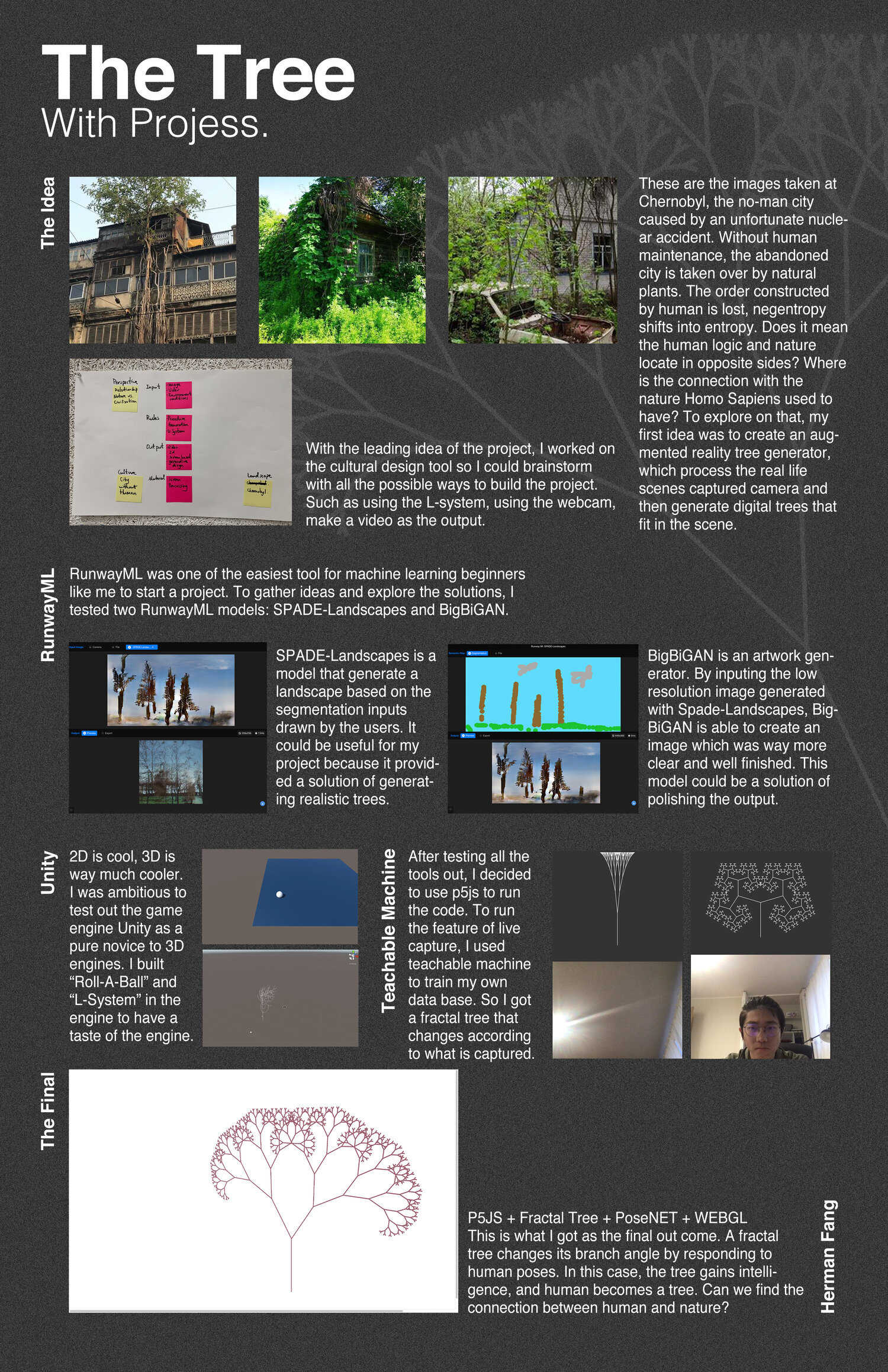

These images were taken at Chernobyl, the city left without people after an unfortunate nuclear accident. Without human maintenance, the abandoned city has been taken over by plants. The order constructed by humans is lost; negentropy shifts into entropy. Does that mean human logic and nature sit on opposite sides? Where is the connection with nature that Homo sapiens used to have? To explore this, my first idea was to create an augmented reality tree generator, which processes real-life scenes captured by a camera and then generates digital trees that fit into the scene.

With this leading idea, I worked through the cultural design tool so I could brainstorm all the possible ways to build the project, such as using an L-system, using the webcam, or making a video as the output.

The First Try With RunwayML

RunwayML is one of the easiest tools for machine learning beginners like me to start a project with. To gather ideas and explore solutions, I tested two RunwayML models: SPADE-Landscapes and BigBiGAN.

SPADE-Landscapes is a model that generates a landscape from segmentation inputs drawn by the user. It could be useful for my project because it offered a way to generate realistic trees.

BigBiGAN is an artwork generator. Given the low-resolution image generated with SPADE-Landscapes as input, BigBiGAN was able to create an image that was much clearer and better finished. This model could be a way to polish the output.

However, the RunwayML models were not perfect. The webcam could not be used as an input for SPADE-Landscapes, so the concept of live capture would not work with the RunwayML models. I therefore moved on to other explorations.

The Second Run With L-System

2D is cool, 3D is way cooler.

As a pure novice to 3D engines, I was eager to try out the game engine Unity. I built the simplest game scene, “Roll-a-Ball”, to get a taste of the engine.

Next, I started to build an L-system tree in Unity by following Richard Hawkes's tutorial (https://www.youtube.com/watch?v=uBEA6VSUybk). Although the result was satisfying, the coding was stressful for me, so it was an interesting attempt but would not be the technology for the final outcome.

Code:

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

public class LSystemGenerator : MonoBehaviour

{

private string axiom = "F";

private float angle;

private string currentString;

private Dictionary<char, string> rules = new Dictionary<char, string>();

private Stack<TransformInfo> transformStack = new Stack<TransformInfo>();

private float length;

private bool isGenerating = false;

// Start is called before the first frame update

void Start()

{

rules.Add('F', "FF+[+F-F-F]-[-F+F+F]");

currentString = axiom;

angle = 30f;

length = 5f;

StartCoroutine(GenerateLSystem ());

}

// Update is called once per frame

void Update()

{

}

IEnumerator GenerateLSystem()

{

int count = 0;

while (count < 5)

{

if (!isGenerating)

{

isGenerating = true;

count++; // advance the counter so the loop ends after five generations

StartCoroutine (Generate ());

}

else

{

yield return new WaitForSeconds(0.1f);

}

}

}

IEnumerator Generate()

{

length = length / 2f;

string newString = "";

char[] stringCharacters = currentString.ToCharArray ();

for (int i = 0; i < stringCharacters.Length; i++)

{

char currentCharacter = stringCharacters [i];

if (rules.ContainsKey (currentCharacter))

{

newString += rules [currentCharacter];

} else {

newString += currentCharacter.ToString ();

}

}

currentString = newString;

Debug.Log(currentString);

stringCharacters = currentString.ToCharArray();

for (int i = 0; i < stringCharacters.Length; i++)

{

char currentCharacter = stringCharacters[i];

if (currentCharacter == 'F')

{

Vector3 initialPosition = transform.position;

transform.Translate (Vector3.forward * length);

Debug.DrawLine (initialPosition, transform.position, Color.white, 10000f, false);

yield return null;

} else if (currentCharacter == '+')

{

transform.Rotate (Vector3.up * angle);

} else if (currentCharacter == '-')

{

transform.Rotate (Vector3.up * -angle);

} else if (currentCharacter == '[')

{

TransformInfo ti = new TransformInfo();

ti.position = transform.position;

ti.rotation = transform.rotation;

transformStack.Push(ti);

} else if (currentCharacter == ']')

{

TransformInfo ti = transformStack.Pop();

transform.position = ti.position;

transform.rotation = ti.rotation;

}

}

isGenerating = false;

}

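}

// Helper type used by transformStack. It is not shown in the original listing;

// this definition is an assumption based on how its fields are used above.

public class TransformInfo

{

public Vector3 position;

public Quaternion rotation;

}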
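The string-rewriting step at the heart of the script above can also be tried outside Unity. The following is a minimal JavaScript sketch, assuming the same axiom and rule as the C# code; the function names `expand` and `countChar` are made up for this illustration and do not come from the tutorial.

```javascript
// Rewrite rules: each key is replaced by its value in every generation.
// The rule is copied from the C# script above.
const rules = { F: "FF+[+F-F-F]-[-F+F+F]" };

// Apply the rules to every character of `input`, `generations` times.
// Characters without a rule (+, -, [, ]) are copied unchanged.
function expand(input, rules, generations) {
  let current = input;
  for (let g = 0; g < generations; g++) {
    let next = "";
    for (const ch of current) {
      next += Object.prototype.hasOwnProperty.call(rules, ch) ? rules[ch] : ch;
    }
    current = next;
  }
  return current;
}

// Count how often `ch` occurs, e.g. how many line segments ('F') get drawn.
function countChar(text, ch) {
  return text.split(ch).length - 1;
}

const secondGeneration = expand("F", rules, 2);
console.log(secondGeneration.length);          // 172
console.log(countChar(secondGeneration, "F")); // 64
```

Each generation multiplies the number of `F` segments by eight, so five generations already produce 32,768 segments to draw.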

Close to the Final

After testing all the tools, I decided to run the code in p5.js. To enable live capture, I used Teachable Machine to train a model on my own data set. Thanks to Daniel Shiffman's code and his Coding Train videos, I learned how to code a fractal tree. Combining the two, I got a fractal tree that changes depending on what is captured. For example, when a person is captured, the branch angle variable is 5; when a light is captured, it is 1.

Code:

<div>Teachable Machine Image Model - p5.js and ml5.js</div>

<script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/0.9.0/p5.min.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/0.9.0/addons/p5.dom.min.js"></script>

<script src="https://unpkg.com/ml5@0.4.3/dist/ml5.min.js"></script>

<script type="text/javascript">

var angle = 0;

// Classifier Variable

let classifier;

// Model URL

let imageModelURL = 'https://teachablemachine.withgoogle.com/models/TrshwMRe/';

// Video

let video;

let flippedVideo;

// To store the classification

let label = "";

// Load the model first

function preload() {

classifier = ml5.imageClassifier(imageModelURL + 'model.json');

}

function setup() {

createCanvas(400, 400);

// Create the video

video = createCapture(VIDEO);

video.size(400, 400);

//video.hide();

flippedVideo = ml5.flipImage(video);

// Start classifying

classifyVideo();

}

function draw() {

background(51);

// Draw the video

// image(flippedVideo, 0, 0);

angle = Number(label) || 0; // labels arrive as strings; use 0 until a numeric label is available

stroke(255);

translate(200, height);

branch(100);

// Draw the label

fill(255);

textSize(16);

textAlign(CENTER);

text(label, width / 2, height - 4);

}

// Get a prediction for the current video frame

function classifyVideo() {

flippedVideo = ml5.flipImage(video);

classifier.classify(flippedVideo, gotResult);

}

// When we get a result

function gotResult(error, results) {

// If there is an error

if (error) {

console.error(error);

return;

}

// The results are in an array ordered by confidence.

// console.log(results[0]);

label = results[0].label;

// Classify again!

classifyVideo();

}

function branch(len) {

line(0, 0, 0, -len);

translate(0, -len);

if (len > 4) {

push();

rotate(angle);

branch(len * 0.67);

pop();

push();

rotate(-angle);

branch(len * 0.67);

pop();

}

//line(0, 0, 0, -len * 0.67);

}

</script>
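In the sketch above the classifier's label is used directly as the branch angle, which only works if the Teachable Machine classes are named with numbers. A more explicit alternative is a lookup table. This is a minimal sketch, not the project's code: the class names `person` and `light` and the helper `labelToAngle` are assumptions for illustration; only the angle values 5 and 1 come from the description above.

```javascript
// Hypothetical class names; replace them with the labels of your own model.
const ANGLE_BY_LABEL = new Map([
  ["person", 5],
  ["light", 1],
]);

// Return the branch angle for a classifier label, or `fallback`
// when the label is unknown (for example before the first result arrives).
function labelToAngle(label, fallback = 0) {
  return ANGLE_BY_LABEL.has(label) ? ANGLE_BY_LABEL.get(label) : fallback;
}

console.log(labelToAngle("person")); // 5
console.log(labelToAngle("light"));  // 1
console.log(labelToAngle(""));       // 0
```

Inside `draw()`, a call such as `angle = labelToAngle(label);` would then set the angle. Note that p5.js treats the value passed to `rotate()` as radians unless `angleMode(DEGREES)` is set.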

The END

p5.js + Fractal Tree + PoseNet + WebGL

This is what I got as the final outcome: a fractal tree that changes its branch angle in response to human poses.

In this case, the tree gains intelligence, and the human becomes a tree. Can we find the connection between humans and nature?

Links to the project:

https://editor.p5js.org/wangyu.fang@cca.edu/full/j_CmnlcL7

https://editor.p5js.org/wangyu.fang@cca.edu/sketches/j_CmnlcL7

Code:

var angle = 0;

let video;

let poseNet;

let leftX = 0;

let leftY = 0;

let rightX = 0;

let rightY = 0;

let noseX = 0;

let noseY = 0;

function setup() {

createCanvas(1920, 1080, WEBGL);

video = createCapture(VIDEO);

video.hide();

poseNet = ml5.poseNet(video, modelReady);

poseNet.on('pose', gotPoses);

}

function gotPoses(poses) {

// console.log(poses);

if (poses.length > 0) {

let lX = poses[0].pose.keypoints[9].position.x;

let lY = poses[0].pose.keypoints[9].position.y;

let rX = poses[0].pose.keypoints[10].position.x;

let rY = poses[0].pose.keypoints[10].position.y;

let nX = poses[0].pose.keypoints[0].position.x;

let nY = poses[0].pose.keypoints[0].position.y;

leftX = lerp(leftX, lX, 0.5);

leftY = lerp(leftY, lY, 0.5);

rightX = lerp(rightX, rX, 0.5);

rightY = lerp(rightY, rY, 0.5);

noseX = lerp(noseX, nX, 0.5);

noseY = lerp(noseY, nY, 0.5);

}

}

function modelReady() {

console.log('model ready');

}

function draw() {

background(255);

camera(-(Math.round(noseX-320)), 0, -500, 0, 200, 0, 0, 1, 0);

let d1 = dist(leftX, leftY, rightX, rightY);

let d2 = dist(leftX, leftY, noseX, noseY);

let d3 = dist(rightX, rightY, noseX, noseY);

console.log(Math.round(noseX-320));

angle = d1/300;

stroke(d1, d2/2, d3/2);

strokeWeight(2);

translate(0,height/2);

branch(100);

}

function branch(len) {

line(0, 0, 0, -(len*2));

translate(0, -(len*2));

if (len > 4) {

push();

rotate(angle);

branch(len * 0.67);

pop();

push();

rotate(-angle);

branch(len * 0.67);

pop();

}

}

function mousePressed() {

let fs = fullscreen();

fullscreen(!fs);

}
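The pose-to-tree mapping in the sketch above can be isolated into two small pure functions, which makes it easy to check by hand. This is a minimal sketch under assumptions: `smooth` and `wristAngle` are names invented here, while the smoothing factor 0.5 and the divisor 300 are taken from the code above. Keypoints 9 and 10 in PoseNet are the left and right wrists, so `d1` is the distance between the wrists.

```javascript
// Move `previous` a fraction `amount` of the way toward `target`.
// This is the same formula p5's lerp() uses.
function smooth(previous, target, amount = 0.5) {
  return previous + (target - previous) * amount;
}

// Branch angle from two wrist positions: the distance between the wrists
// in pixels divided by `divisor`. The result is passed to rotate().
function wristAngle(left, right, divisor = 300) {
  return Math.hypot(right.x - left.x, right.y - left.y) / divisor;
}

// Feeding the same reading three times moves the smoothed value
// halfway toward it on every pose update.
let x = 0;
for (let i = 0; i < 3; i++) {
  x = smooth(x, 400);
}
console.log(x); // 350

// Wrists 300 pixels apart give an angle of 1.
console.log(wristAngle({ x: 100, y: 200 }, { x: 400, y: 200 })); // 1
```

Spreading the arms wider increases the wrist distance and therefore opens the branches; bringing the hands together closes the tree toward a single line.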