Facial Micro-Expressions for Autistic Children

Jiaxin He

Introduction

My personal project is about facial micro-expressions and autistic children. Through my research, I found that autistic children often have two difficulties: recognizing other people's facial expressions, and producing the right expression at the right time. I chose the first of these as the direction for my further research and my personal project.

RESEARCH

People with autism sometimes give ambiguous looks

Facial expressions smooth social interactions: A smile may show interest, a frown empathy. People with autism have difficulty making appropriate facial expressions at the right times, according to an analysis of 39 studies¹. Instead, they may remain expressionless or produce looks that are difficult to interpret…

Facial expression recognition as a candidate marker for autism spectrum disorder: how frequent and severe are deficits?

Background

Impairments in social communication are a core feature of Autism Spectrum Disorder (ASD). Because the ability to infer other people’s emotions from their facial expressions is critical for many aspects of social …

Kids with autism struggle to read facial expressions

(Reuters Health) - Children with autism may have a harder time reading emotions on people’s faces than other kids, but they also misunderstand the feelings they see in a way that’s pretty similar to youth without autism, a small study suggests…

DATASET

SMIC - Spontaneous Micro-expression Database

Micro-expressions are important clues for analyzing people's deceitful behaviors. So far the lack of training source has been hindering research about automatic micro-expression recognition, and SMIC was developed to fill this gap. SMIC database includes spontaneous micro-expressions elicited by emotional movie clips. Emotional movie clips that can induce strong emotion reactions were shown to subjects, and subjects were asked to HIDE their true feelings while watching movie clips. If they failed to do so they will have to fill in a long boring questionnaire as punishment. This kind of setting is to create a high-stake lie situation so that micro-expressions can be induced…

Impaired detection of happy facial expressions in autism

The detection of emotional facial expressions plays an indispensable role in social interaction. Psychological studies have shown that typically developing (TD) individuals more rapidly detect emotional expressions than neutral expressions…

Detecting Micro-Expressions in Real Time Using High-Speed Video Sequences

Automatic facial expression analysis has been extensively studied in the last decades, as it has applications in various multidisciplinary domains, ranging from behavioral psychology, human-computer interaction, deceit detection, just to name a few. In the last years, a new research field has drawn the attention of computer vision researchers: micro-expression analysis....

FIRST TRY

CODE

Design Tool

USING TEACHABLE MACHINE

SCREENSHOT OF P5.JS

```html
<div>Teachable Machine Image Model - p5.js and ml5.js</div>
<script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/0.9.0/p5.min.js"></script>
<script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/0.9.0/addons/p5.dom.min.js"></script>
<script src="https://unpkg.com/ml5@0.4.3/dist/ml5.min.js"></script>
<script type="text/javascript">
  // Classifier variable
  let classifier;
  // Model URL
  let imageModelURL = 'https://teachablemachine.withgoogle.com/models/DONxWTrj/';

  // Video
  let video;
  let flippedVideo;
  // To store the classification
  let label = "";

  // Load the model first
  function preload() {
    classifier = ml5.imageClassifier(imageModelURL + 'model.json');
  }

  function setup() {
    createCanvas(320, 260);
    // Create the video
    video = createCapture(VIDEO);
    video.size(320, 240);
    video.hide();

    flippedVideo = ml5.flipImage(video);
    // Start classifying
    classifyVideo();
  }

  function draw() {
    background(0);
    // Draw the video
    image(flippedVideo, 0, 0);

    // Draw the label
    fill(255);
    textSize(16);
    textAlign(CENTER);
    text(label, width / 2, height - 4);
  }

  // Get a prediction for the current video frame
  function classifyVideo() {
    flippedVideo = ml5.flipImage(video);
    classifier.classify(flippedVideo, gotResult);
  }

  // When we get a result
  function gotResult(error, results) {
    // If there is an error
    if (error) {
      console.error(error);
      return;
    }
    // The results are in an array ordered by confidence.
    // console.log(results[0]);
    label = results[0].label;
    // Classify again!
    classifyVideo();
  }
</script>
```

FINAL PROJECT

CODE

CULTURE DESIGN TOOL

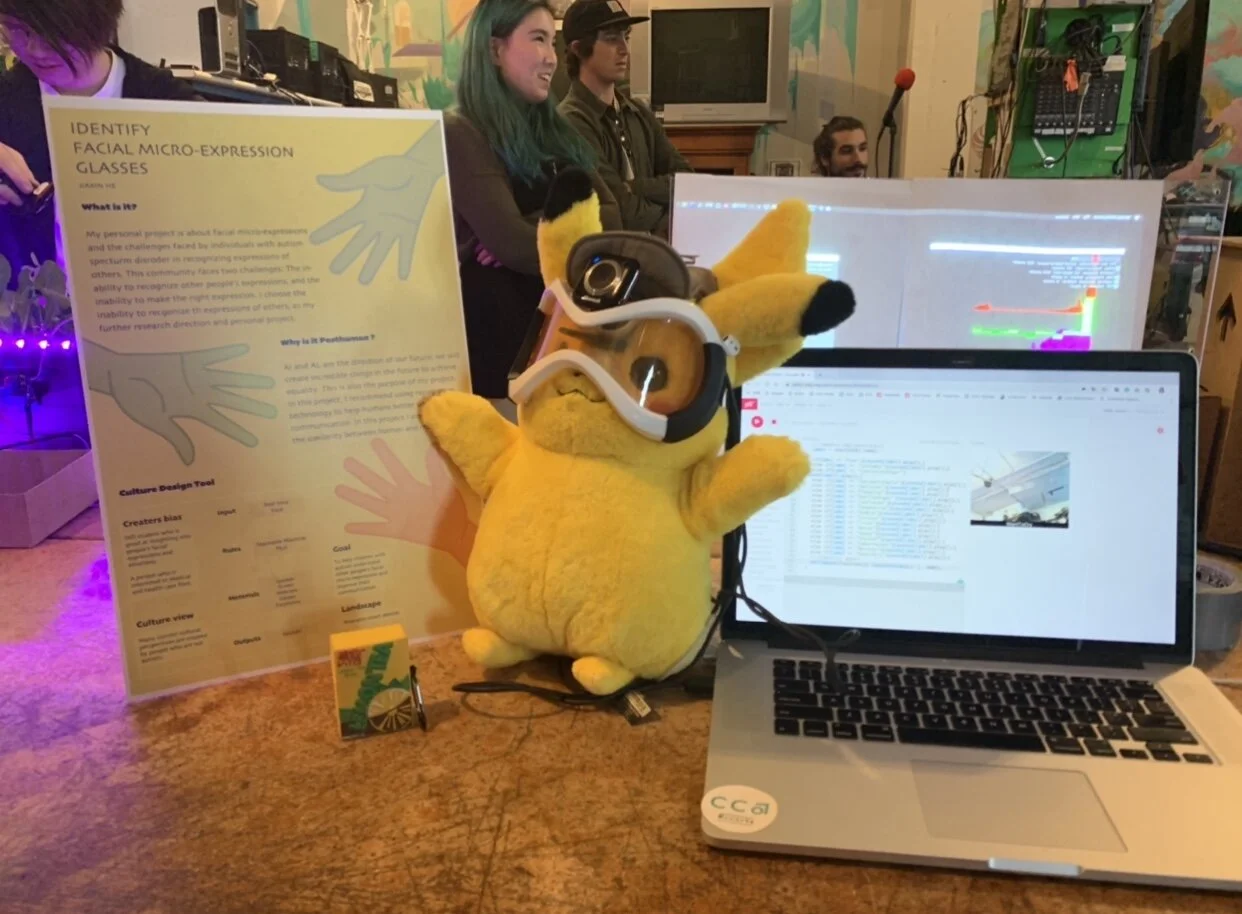

POSTER

FINAL SHOW

```javascript
// Requires p5.js, ml5.js, and the p5.sound library (loadSound comes from p5.sound).

// Classifier variable
let classifier;
// Model URL
let imageModelURL = 'https://teachablemachine.withgoogle.com/models/-YunFq8e/';

// Video
let video;
let flippedVideo;
// To store the classification
let label = "";
// One sound file per micro-expression label
let sounds;

// Load the model and the sounds first
function preload() {
  classifier = ml5.imageClassifier(imageModelURL + 'model.json');
  // Keys must match the class names used in Teachable Machine
  sounds = {
    Fear: loadSound("fear.mp3"),
    Contempt: loadSound("contempt.mp3"),
    ControlledAnger: loadSound("controlled anger.mp3"),
    SarcasticSmile: loadSound("sarcastic smile.mp3"),
    Derision: loadSound("derision.mp3"),
    Pleading: loadSound("pleading.mp3"),
    BaffledAnger: loadSound("baffled anger.mp3"),
    Confusion: loadSound("confusion.mp3"),
    DeepHatred: loadSound("deep hatred.mp3"),
    Humble: loadSound("humble.mp3"),
    Curious: loadSound("curious.mp3"),
    Tired: loadSound("tired.mp3"),
    Bored: loadSound("bored.mp3"),
    Happy: loadSound("happy.mp3"),
    Shocked: loadSound("shocked.mp3"),
    Embarrassed: loadSound("embarrassed.mp3"),
    Nervous: loadSound("nervous.mp3"),
    WantToCry: loadSound("want to cry.mp3"),
  };
}

function setup() {
  createCanvas(320, 260);
  // Create the video
  video = createCapture(VIDEO);
  video.size(320, 240);
  video.hide();

  flippedVideo = ml5.flipImage(video);
  // Start classifying
  classifyVideo();
}

function draw() {
  background(0);
  // Draw the video
  image(flippedVideo, 0, 0);

  // Draw the label
  fill(255);
  textSize(16);
  textAlign(CENTER);
  text(label, width / 2, height - 4);
}

// Get a prediction for the current video frame
function classifyVideo() {
  flippedVideo = ml5.flipImage(video);
  classifier.classify(flippedVideo, gotResult);
}

// When we get a result
function gotResult(error, results) {
  // If there is an error
  if (error) {
    console.error(error);
    return;
  }
  // The results are in an array ordered by confidence.
  // console.log(results[0]);
  label = results[0].label;

  // Play the sound mapped to this label, if there is one.
  // (Same effect as checking each label in a long if/else chain.)
  if (Object.prototype.hasOwnProperty.call(sounds, label)) {
    sounds[label].play();
  }

  // Classify again after one second
  setTimeout(classifyVideo, 1000);
}
```
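As written, `gotResult` plays the matched sound on every classification, once per second, for as long as the same expression is held, and it acts on the top label however low its confidence is. Below is a minimal sketch of one way to gate playback, assuming each ml5 result also carries a `confidence` field alongside `label` (as ml5's image classifier output does). The helper name `createSoundTrigger` and the 0.8 threshold are hypothetical choices, not part of the original project or of ml5.

```javascript
// Hypothetical helper (not part of ml5 or p5): decides which label, if any,
// should trigger a sound. Assumes ml5-style results: an array of
// { label, confidence } objects sorted by descending confidence.
function createSoundTrigger(minConfidence = 0.8) {
  let lastLabel = null; // label that most recently triggered a sound

  return function nextSound(results) {
    if (!Array.isArray(results) || results.length === 0) {
      return null; // nothing to act on
    }
    const top = results[0];
    // Ignore guesses below the confidence threshold.
    if (top.confidence < minConfidence) {
      return null;
    }
    // Fire only when the confident label changes, so a held expression
    // does not retrigger its sound on every classification.
    if (top.label === lastLabel) {
      return null;
    }
    lastLabel = top.label;
    return top.label;
  };
}

// Example with made-up results:
const nextSound = createSoundTrigger(0.8);
console.log(nextSound([{ label: "Happy", confidence: 0.92 }])); // Happy
console.log(nextSound([{ label: "Happy", confidence: 0.95 }])); // null (unchanged)
console.log(nextSound([{ label: "Bored", confidence: 0.4 }]));  // null (low confidence)
console.log(nextSound([{ label: "Bored", confidence: 0.85 }])); // Bored
```

In the sketch, `nextSound` would be created once at the top level, and `gotResult` could call `const name = nextSound(results);` and play `sounds[name]` only when `name` is not `null`. The threshold is a guess and would need tuning against the real model.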

Data Challenges: In my personal project I trained the model on twenty micro-expressions, uploading about 200 images for each one. In my opinion, there are several reasons why the model could not identify them accurately:

1. My 20 micro-expressions are very similar to one another, and the differences between them are very subtle. The dataset needs distinct characteristics that separate each class.

2. A micro-expression is communicated through a series of facial movements, so a single image is not enough to identify it. Although Teachable Machine can record a series of images, it classifies each image individually and cannot recognize a continuous sequence.

3. Uploading more images might improve emotion detection. However, Teachable Machine limits how many images can be uploaded: when I uploaded 300 to 400 images for each class, the page crashed and closed.

4. Real-time recognition is more complicated than I expected. Unlike a still image, a person's facial expression changes continuously and can express several emotions within the time it takes the model to respond.
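Challenges 2 and 4 both come from the model judging one frame at a time. A real fix would need a model trained on frame sequences, which, as noted above, Teachable Machine does not do. A lighter mitigation is to smooth the per-frame labels: keep the last few top labels and report one only when it wins a strict majority of that window. The sketch below assumes this approach; the class name `LabelSmoother` and the window size of 5 are hypothetical, and the technique only reduces flicker between frames. It does not recognize facial motion itself.

```javascript
// Hypothetical helper: smooths per-frame labels from an image classifier by
// keeping the last `windowSize` predictions and reporting a label only when
// it wins a strict majority of the window. This reduces frame-to-frame
// flicker; it does not model facial motion.
class LabelSmoother {
  constructor(windowSize = 5) {
    this.windowSize = windowSize;
    this.history = []; // most recent labels, oldest first
  }

  // Add one frame's top label; returns the majority label or null.
  push(label) {
    this.history.push(label);
    if (this.history.length > this.windowSize) {
      this.history.shift(); // drop the oldest frame
    }
    // Count how often each label appears in the current window.
    const counts = new Map();
    for (const l of this.history) {
      counts.set(l, (counts.get(l) || 0) + 1);
    }
    // A label needs more than half of the full window to be reported.
    for (const [l, n] of counts) {
      if (n > this.windowSize / 2) {
        return l;
      }
    }
    return null;
  }
}

// Example with made-up per-frame labels:
const smoother = new LabelSmoother(5);
const frames = ["Happy", "Curious", "Happy", "Happy", "Bored", "Bored", "Bored"];
console.log(frames.map((f) => smoother.push(f)));
// returns [null, null, null, "Happy", "Happy", null, "Bored"]
```

In the p5.js sketch, `gotResult` could pass `results[0].label` to `push` and update `label` only when the returned value is not `null`. A larger window gives steadier output but reacts more slowly, which matters for expressions as brief as micro-expressions.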